Psychology-Based Tasks Expose Limits in AI Visual Cognition

The field of Artificial Intelligence has made incredible strides, with models now capable of performing tasks that once seemed exclusively human. But do these models truly “think” and analyze information the way humans do, in the psychological sense? A recent study examines this question and reveals the current limitations of multi-modal Large Language Models (LLMs) on complex visual cognition tasks.

Assessing Human-Like Understanding in AI

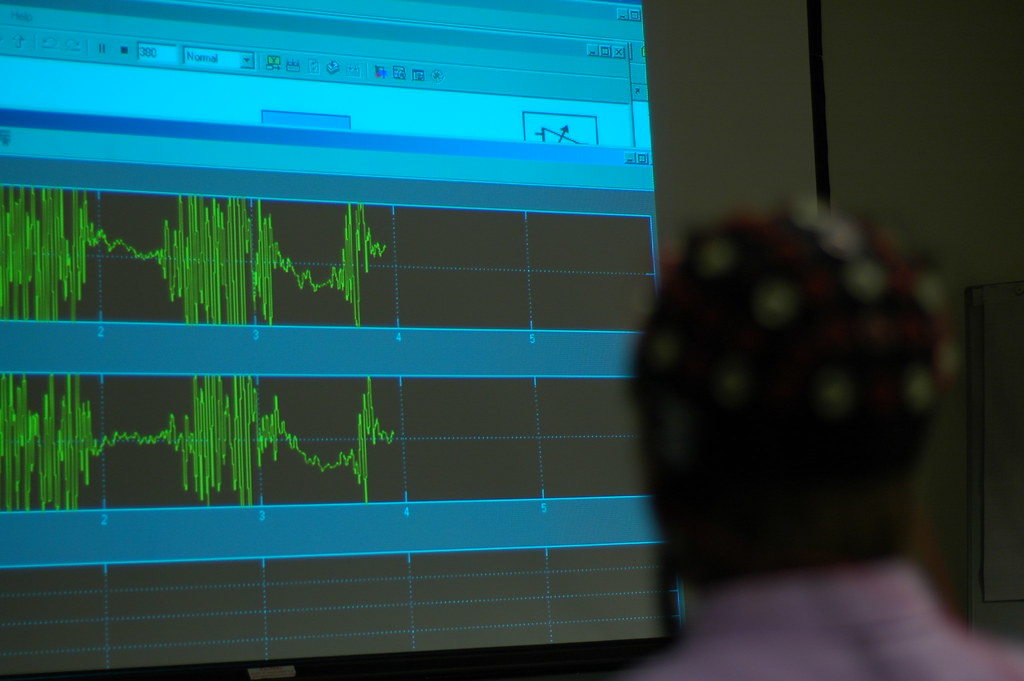

Researchers from the Max Planck Institute for Biological Cybernetics, the Institute for Human-Centered AI at Helmholtz Munich, and the University of Tübingen sought to understand how well LLMs grasp intricate interactions and relationships in visual cognition. Their work, published in Nature Machine Intelligence, highlights that while these models can process and interpret data effectively, they often struggle with nuances that humans easily understand. The research was inspired by a paper by Brenden M. Lake et al., which outlined key cognitive components needed for machine learning models to be considered human-like. The core of the study involved testing multi-modal LLMs on tasks derived from psychology studies, an approach pioneered by Marcel Binz and Eric Schulz in PNAS. These tasks were designed to evaluate the models’ understanding of:

- Intuitive Physics: Can the models determine if a tower of blocks is stable?

- Causal Relationships: Can the models infer relationships between events?

- Intuitive Psychology: Can the models understand the preferences of others?

By comparing the responses of LLMs to those of human participants, the researchers gained insights into the areas where models align with human cognition and where they fall short.
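As a rough illustration of what such a comparison could look like, the sketch below scores a model on a block-tower stability task in two ways: accuracy against the true outcome, and correlation with human judgments. It is not the study's actual analysis. The data values, variable names, and the choice of Pearson correlation as the alignment measure are all assumptions made for the example.

```python
from math import sqrt


def accuracy(predictions, ground_truth):
    """Fraction of trials where the prediction matches the true label."""
    matches = sum(p == t for p, t in zip(predictions, ground_truth))
    return matches / len(ground_truth)


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical data for six block towers: 1 = "stable", 0 = "unstable".
ground_truth = [1, 0, 1, 1, 0, 0]
model_answers = [1, 0, 0, 1, 1, 0]
# Hypothetical fraction of human participants who judged each tower stable.
human_stable_rate = [0.9, 0.2, 0.7, 0.8, 0.4, 0.1]

# How often the model is right about the physical outcome.
model_accuracy = accuracy(model_answers, ground_truth)
# How closely the model's answers track human judgments, right or wrong.
human_alignment = pearson(model_answers, human_stable_rate)

print(f"accuracy: {model_accuracy:.2f}, human alignment: {human_alignment:.2f}")
```

Keeping the two scores separate matters because a model can be accurate without being human-like, or can mirror human judgments, including human mistakes, without being accurate.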

The Results: Impressive but Incomplete

The study revealed that while some LLMs are proficient at processing basic visual data, they struggle to emulate more intricate aspects of human cognition. This raises important questions about the future development of AI:

- Can these limitations be overcome by simply scaling up models and increasing the diversity of training data?

- Or do these models need to be equipped with specific inductive biases, such as a built-in physics engine, to achieve a more robust understanding of the physical world?

Implications for the Future of AI

This research has significant implications for the future of AI development and its applications in various fields. Understanding the limitations of current models is crucial for:

- Developing more human-like AI: By identifying the gaps in cognitive abilities, researchers can focus on developing models that more closely mimic human reasoning and understanding.

- Improving AI applications in real-world scenarios: Recognizing the limitations of AI in complex tasks can help developers create more reliable and effective applications in fields such as robotics, healthcare, and education.

The researchers plan to extend their work by testing models that have been fine-tuned on the same tasks used in the experiments. While early results show improvements in specific tasks, it remains to be seen whether these improvements translate to a broader, more generalized understanding, a skill that humans excel at.

The Bigger Picture

This study underscores the importance of interdisciplinary research, combining insights from computer science and psychology to advance our understanding of intelligence, both artificial and human. As AI continues to evolve, it is crucial to critically evaluate its capabilities and limitations to ensure that it is developed and applied responsibly.